BFGS 算法

也即 Broyden-Fletcher-Goldfarb-Shanno 算法。参考 Wikipedia 。

基本原理

The search direction at stage $k$ is given by the Newton method:

$$p_k=-B_k\nabla f(x_k)$$

where $B_k$ is an approximation of the Hessian matrix, and $\nabla f(x_k)$ is the gradient of the function at $x_k$

The quasi-Newton condition imposed on the update of $B_k$ is:

$$B_{k+1}(x_{k+1}-x_k)=\nabla f(x_{k+1})-\nabla f(x_k)$$

Let $y_k=\nabla f(x_{k+1})-\nabla f(x_k)$ and $s_k=x_{k+1}-x_k$, then $B_{k+1}s_k=y_k$ which is the secant equation. The curvature condition $s_k^Ty_k>0$ should be satisfied.

In the BFGS method, instead of requiring the full Hessian matrix at $x_{k+1}$ to be computed, the approximate Hessian is given by:

$$B_{k+1}=B_k+\frac{y_ky_k^T}{y_k^Ts_k}-\frac{B_ks_ks_k^TB_k}{s_k^TB_ks_k}$$

$$B_{k+1}^{-1}=\left(I-\frac{s_ky_k^T}{y_k^Ts_k}\right)B_k^{-1}\left(I-\frac{y_ks_k^T}{y_k^Ts_k}\right)+\frac{s_ks_k^T}{y_k^Ts_k}$$

or

$$B_{k+1}^{-1}=B_k^{-1}+\frac{(s_k^Ty_k+y_k^TB_k^{-1}y_k)(s_ks_k^T)}{(s_k^Ty_k)^2}-\frac{B_k^{-1}y_ks_k^T+s_ky_k^TB_k^{-1}}{s_k^Ty_k}$$

算法步骤

From an initial guess $x_0$ and an approximate Hessian matrix $B_0$ (generally the identity matrix), the following steps are repeated as $x_k$ converges to the solution:

- Obtain a direction: $p_k=-B_k^{-1}\nabla f(x_k)$;

- Perform a line search to find an acceptable stepsize $\alpha_k$ in the direction found in the first step;

- Set $s_k=\alpha_kp_k$ and update $x_{k+1}=x_k+s_k$;

- $y_k=\nabla f(x_{k+1})-\nabla f(x_k)$;

- $B_{k+1}=B_k+\frac{y_ky_k^T}{y_k^Ts_k}-\frac{B_ks_ks_k^TB_k}{s_k^TB_ks_k}$.

固定点迭代法

固定点(Fixed point)

参考 Wikipedia https://en.wikipedia.org/wiki/Fixed_point_(mathematics) 。

Not to be confused with a stationary point where $f’(x)=0$ . In mathematics, $c$ is a fixed point of the function $f(x)$ if $f(c) = c$.

Points that come back to the same value after a finite number of iterations of the function are called periodic points. A fixed point is a periodic point with period equal to one.

固定点迭代

定义

参考 Wikipedia 。

In numerical analysis, given a function $f$ defined on the real number with real values and given a point $x_0$ in the domain of $f$, the fixed point iteration is

$$x_{n+1}=f(x_n), n=0,1,2,…$$

应用

Newton’s method for finding roots of a given differentiable function $f(x)$ is

$$x_{n+1}=x_n-\frac{f(x_n)}{f’(x_n)}$$

If we write $g(x)=x-\frac{f(x)}{f’(x)}$, we may rewrite the Newton iteration as

$$x_{n+1}=g(x_n)$$

If this iteration converges to a fixed point $x$ of $g$, then

$$x=g(x)=x-\frac{f(x)}{f’(x)}$$

so that

$$\frac{f(x)}{f’(x)}=0$$

The reciprocal of anything is nonzero, therefore $f(x)=0$: $x$ is a root of $f$.

非线性共轭梯度法

参考 Wikipedia 。

In numerical optimization, the nonlinear conjugate gradient method generalizes the conjugate gradient method to nonlinear optimization.

线性共轭梯度法

For a quadratic function

$$f(x)=||Ax-b||^2$$

the minimum of $f$ is obtained when the gradient $\nabla_x f=2A^T(Ax-b)=0$.

Linear conjugate gradient seeks a solution to the linear equation $A^T Ax=A^T b$.

非线性共轭梯度法

The nonlinear conjugate gradient method is generally used to find the local minimum of a nonlinear function using its gradient $\nabla_x f$ alone.

算法步骤

Given a function $f(x)$, one simply starts in the steepest descent direction:

$$\Delta x_0=-\nabla_x f(x_0)$$

with an step length $\alpha$, and preforms a line search:

$$\alpha_0:=\text{argmin}_\alpha f(x_0+\alpha\Delta x_0)$$

$$x_1=x_0+\alpha_0\Delta x_0$$

After the first iteration in $\Delta x_0$, the following steps continue along a subsequent conjugate direction $s_n$, where $s_0=\Delta x_0$:

- Calculate the steepest direction: $\Delta x_n=-\nabla_x f(x_n)$;

- Compute $\beta_n$ according to one of the formulas below;

- Update the conjugate direction: $s_n=\Delta x_n+\beta_n s_{n-1}$;

- Perform a line search: optimize $\alpha_n=\text{argmin}_\alpha f(x_n+\alpha s_n)$;

- Update: $x_{n+1}=x_n+\alpha_n s_n$.

Subsequent search directions lose conjugacy requiring the search direction to be reset to the steepest descent direction at least every $N$ iterations, or sooner if progress stops.

$\beta_n$ 的计算

Four of the best known formulas for $\beta_n$ are named after their developers:

- Fletcher-Reeves: $\beta_n^{FR}=\frac{\Delta x_n^T \Delta x_n}{\Delta x_{n-1}^T \Delta x_{n-1}}$;

- Polak-Ribiere: $\beta_n^{PR}=\frac{\Delta x_n^T (\Delta x_n-\Delta x_{n-1})}{\Delta x_{n-1}^T \Delta x_{n-1}}$;

- Hestenes-Stiefel: $\beta_n^{HS}=-\frac{\Delta x_n^T (\Delta x_n-\Delta x_{n-1})}{s_{n-1}^T (\Delta x_n-\Delta x_{n-1})}$;

- Dai-Yuan: $\beta_n^{DY}=-\frac{\Delta x_n^T \Delta x_n}{s_{n-1}^T (\Delta x_n-\Delta x_{n-1})}$.

A popular choice is $\beta=\max\lbrace 0,\beta^{PR}\rbrace$, which provides a direction reset automatically.

邻域算法

邻域算法(Neighborhood Algorithm)是由 (Sambridge, 1999) 引入到地球物理反演的应用中的。在该文中,作者发出了灵魂拷问:

How can a search for new models be best guided by all previous models for which the forward problem has been solved (and hence the data-misfit value evaluated) ?

邻域算法采用简单的几何概念指导参数空间中的模型搜索,是一种非线性的无导数反演方法 (Peyrat and Olsen, 2004) 。

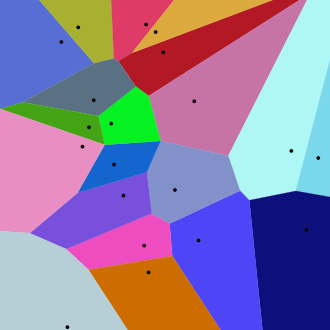

Voronoi 图

参考 Wikipedia 。

In mathematics, a Voronoi diagram is a partition of a plane into regions close to each of a given set of objects.

Let $X$ be a metric space with distance function $d$. Let $K$ be a set of indices and let $(P_k)_{k \in K}$ be a tuple (ordered collection) of nonempty subsets (the sites) in the space $X$. The Voronoi cell, or Voronoi region, $R_k$, associated with the site $P_k$ is the set of all points in $X$ whose distance to $P_k$ is not greater than their distance to the other sites $P_j$, where $j$ is any index different from $k$:

$$ R_k = { x \in X \vert d(x, P_k) \leq d(x, P_j) \text{ for all } j \neq k } $$

算法步骤

The Neighborhood Algorithm can be summarized as follows (Sambridge, 1999):

- Generate an initinal set of $n_s$ models uniformly in parameter space;

- Calculate the misfit function for the most recently generated set of $n_s$ models, and determine the $n_r$ models with the lowest misfit of all models generated so far;

- Generate $n_s$ new models by perfoming a uniform random walk in the Voronoi cell of each of the $n_r$ chosen models (i.e., $n_s/n_r$ samples in each cell);

- Go to step 2.

首先,在一个预定义的模型空间中随机生成 $n_s$ 个模型(即 Voronoi 中心),并计算出这些模型的目标函数值。接着,根据定义的距离测量公式构建出 Voronoi 元胞(Voronoi cell),赋予元胞中每个点与 Voronoi 中心相同的目标函数值。在这个过程中,我们实质上生成了一组等目标函数面。从目前生成的这 $n_s$ 个模型中,选出误差最小的 $n_r$ 个模型,去掉剩下的 $(n_s - n_r)$ 个模型。现在,在这 $n_r$ 个 Voronoi 元胞中,每个元胞生成 $n_s/n_r$ 个新模型,总共产生 $n_s$ 个新模型。再次,选出 $n_r$ 个误差最小的模型,去掉剩下的 $(n_s - n_r)$ 个模型。重复以上过程直到满足预定义的收敛条件。(本段译自:Global Optimization Methods in Geophysical Inversion,第二版,6.1.3 小节)

算法优点

According to the section 6.1.3 of Global Optimization Methods in Geophysical Inversion (2nd edition, Sen and Stoffa, 2013), following are the advantages of the Neighborhood Algorithm:

- It uses previous smaples to determine the next step of the search, or in other words, the algorithm is self-adaptive.

- The algorithm requires only two tuning parameters, namely, $n_s$ and $n_r$.

- The process generates an ensemble of models with low misfit values; thus the method can be used for uncertainty quantification as well.

根据 (Peyrat and Olsen, 2004):(1)在迭代中,新模型只在上一步误差最小的若干个模型的 Voronoi 元胞中产生,因此邻域算法比蒙特卡罗算法具有更少的计算消耗;(2)与遗传算法和模拟退火算法相比,在模型搜索中邻域算法仅需要两个控制参数,需要更少的主观“指导”。

Householder 方法

参考 Wikipedia。

概述

In mathematics, Householder’s methods are a class of root-finding algorithms that are used for functions of one real variable with continuous derivatives up to some order $d + 1$. Each of these methods is characterized by the number $d$, which is known as the order of the method. The algorithm is iterative and has a rate of convergence of order $d + 1$.

方法论

For solving the nonlinear equation $f(x) = 0$, Householder’s method consists of a sequence of iterations:

$$ x_{n + 1} = x_n + d \frac{ (1/f)^{(d - 1)} (x_n) }{ (1/f)^{(d)} (x_n) } $$

beginning with an initial guess $x_0$.

低阶方法

一阶:Newton 方法

Since

$$ (1/f)(x) = \frac{1}{f(x)} \quad\text{ and }\quad (1/f)’(x) = - \frac{f’(x)}{f(x)^2} $$

result in

$$ x_{n + 1} = x_n + 1 \cdot \frac{(1/f)(x_n)}{(1/f)’ (x_n)} = x_n - \frac{f(x_n)}{f’(x_n)} $$

二阶:Halley 方法

Since

$$ (1/f)’(x) = - \frac{f’(x)}{f(x)^2} \quad\text{ and }\quad (1/f)’’(x) = - \frac{f’’(x)}{f(x)^2} + 2 \cdot \frac{f’(x)^2}{f(x)^3} $$

result in

$$ x_{n + 1} = x_n + 2 \cdot \frac{ (1/f)’ (x_n) }{ (1/f)’’ (x_n) } = x_n - \frac{ 2 f(x_n) f’(x_n) }{ 2 f’(x_n)^2 - f(x_n) f’’(x_n) } $$

The following alternative formulation shows the similarity between Halley’s method and Newton’s method. The expression $f(x_n)/f’(x_n)$ is computed only once, and it is particularly useful when $f’’(x_n)/f’(x_n)$ can be simplified:

$$ x_{n + 1} = x_n - \frac{f(x_n)}{f’(x_n)} \left[ 1 - \frac{f(x_n)}{f’(x_n)} \cdot \frac{f’’(x_n)}{2 f’(x_n)} \right]^{ - 1} $$

When the second derivative is very close to zero, the Halley’s method iteration is almost the same as the Newton’s method iteration.